When combatting cognitive bias in market research, it is beneficial to look at tools within intelligence analysis, particularly the Analysis of Competing Hypotheses.

Analysis has a fundamental problem, and that problem is us. Humans aren’t naturally good at it. Cognitive biases are a natural part of our thinking, and no one can avoid them. Humans are good at snap judgments, and those are usually right. Where we struggle is in evaluating evidence rationally and fairly, especially in qualitative research and analysis, where the thinking processes used for snap judgments don’t always apply.

To better understand cognitive bias in market research, it is beneficial to understand how it has been addressed in other sectors. Government intelligence agencies historically have led the way in developing techniques to improve analysis and reduce bias. These techniques generally are focused on human thinking writ large but can be applied to practices within market research.

How to combat cognitive bias in market research

Intelligence analysis, be it geopolitical, military or otherwise, and market research could essentially be considered the same craft with different subject matters. Both have the same goal: to inform a client (either internal or external) and reduce uncertainty, enabling them to make better decisions. Both rely on imperfect information, often in fields that are not easily quantifiable, and both rely on analysts to make judgment calls to interpret available data and come to accurate conclusions about gaps in the information.

Market research methods for gathering data are refined and discussed continually, yet the interpretation of that data is rarely mentioned. The intelligence community has decades of experience facing congressional hearings when it gets things wrong, but market researchers often have only a frustrated client who will not communicate why they didn’t award the next contract. In addition, both are influenced by cognitive biases that can, and do, affect the analysis and the conclusions drawn. These biases are usually neither intentional nor malicious; they are the product of the cognitive shortcuts the human brain takes (those “snap judgments”). For more background on cognitive bias, David McRaney’s blog, You Are Not So Smart, is an excellent introduction, and Richards Heuer’s book, “Psychology of Intelligence Analysis” (free online), offers an in-depth look at these issues.

Analysis of Competing Hypotheses

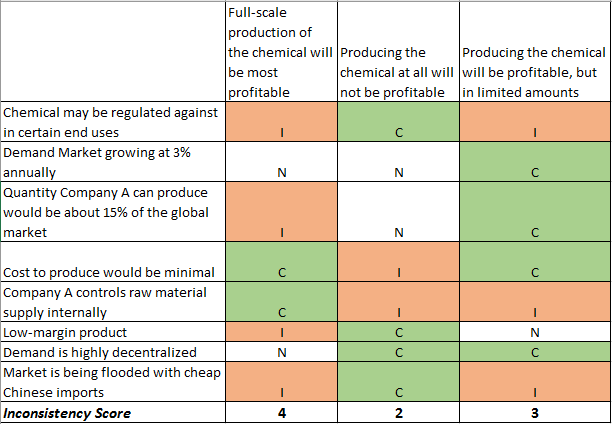

One of the simplest techniques to combat cognitive bias is to conduct an Analysis of Competing Hypotheses (ACH). Developed by Heuer (a Central Intelligence Agency veteran), ACH consists of a matrix with possible hypotheses (or scenarios) across the top, with each individual piece of evidence or information going down the side. ACH can be done with an unlimited number of scenarios, be it 10 hypotheses or hundreds.

The most important point is that each piece of evidence must be evaluated individually against each hypothesis and marked as “consistent,” “neutral,” or “inconsistent.” Working across the matrix rather than down it helps analysts think critically about each piece of evidence, as opposed to each hypothesis. This method can generate a numeric score by tallying “inconsistent” ratings, but the analyst still needs to make a judgment call regarding the importance of each piece of information. Although it can be applied to quantitative and qualitative research, ACH itself is NOT a quantitative method. Ultimately, each hypothesis is evaluated by how much “inconsistent” evidence is in its column, and this is compared to the analyst’s prior judgments. The method is best used to identify hypotheses that are problematic, not to determine which hypothesis is most likely.
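For readers who think in code, the tallying step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the labels H1 to H3 and E1 to E4, the ratings, the function name `inconsistency_scores` and the optional `weights` argument (one possible way to record the analyst's view of how much each piece of evidence matters) are all invented here and are not part of Heuer's description.

```python
# Ratings an analyst may assign to one piece of evidence under one hypothesis.
VALID_RATINGS = {"C", "N", "I"}  # consistent, neutral, inconsistent


def inconsistency_scores(hypotheses, matrix, weights=None):
    """Tally (optionally weighted) "I" ratings in each hypothesis column.

    matrix maps each evidence label to a list of ratings, one per hypothesis,
    in the same order as `hypotheses`. weights is an assumption of this
    sketch: it maps evidence labels to the importance the analyst assigns,
    and any evidence not listed counts as 1.
    """
    weights = weights or {}
    scores = {h: 0 for h in hypotheses}
    # Work across the matrix: one piece of evidence at a time.
    for evidence, ratings in matrix.items():
        if len(ratings) != len(hypotheses):
            raise ValueError(f"{evidence!r} must be rated against every hypothesis")
        for hypothesis, rating in zip(hypotheses, ratings):
            if rating not in VALID_RATINGS:
                raise ValueError(f"unknown rating {rating!r} for {evidence!r}")
            if rating == "I":
                scores[hypothesis] += weights.get(evidence, 1)
    return scores


# Placeholder labels and ratings, invented purely for illustration.
hypotheses = ["H1", "H2", "H3"]
matrix = {
    "E1": ["C", "C", "I"],
    "E2": ["I", "C", "N"],
    "E3": ["I", "N", "C"],
    "E4": ["N", "C", "I"],
}

unweighted = inconsistency_scores(hypotheses, matrix)
# Here the analyst judges E2 to matter twice as much as the other evidence.
weighted = inconsistency_scores(hypotheses, matrix, weights={"E2": 2})
print(unweighted)
print(weighted)
```

The totals are a prompt for discussion rather than a verdict. A high count flags a hypothesis that deserves scrutiny, and the weights simply make the analyst's judgment calls visible.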

Analysis of Competing Hypotheses has three main benefits:

- It creates an analytical paper trail. The analyst, if challenged by a client or supervisor, has a visual tool to help explain their reasoning. In the event they are wrong, it provides evidence as rationale for the conclusions drawn.

- It facilitates discussion. ACH is used best in a team environment, where each piece of evidence rated against a hypothesis is debated by multiple people.

- Finally, it forces an analyst to confront their own biases. When a favored hypothesis has more inconsistencies than others, the analyst is forced to give a thorough explanation of why they think that hypothesis is right or rethink the situation.
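The third benefit can be made concrete with a small check. The sketch below assumes the analyst already has an inconsistency count for each hypothesis. The function name `hypotheses_to_reconsider` and the counts are hypothetical, invented for illustration only.

```python
def hypotheses_to_reconsider(scores, favored):
    """Return the hypotheses that have fewer inconsistencies than the favored one."""
    if favored not in scores:
        raise KeyError(f"{favored!r} has no score")
    favored_score = scores[favored]
    return sorted(h for h, s in scores.items() if s < favored_score)


# Placeholder inconsistency counts, invented purely for illustration.
scores = {"H1": 3, "H2": 1, "H3": 4}
rivals = hypotheses_to_reconsider(scores, favored="H1")
if rivals:
    print(f"H1 has more inconsistencies than {rivals}; explain why or rethink.")
```

An empty result does not prove the favored hypothesis right. It only means the matrix gives no immediate reason to challenge it.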

Analysis of Competing Hypotheses matrix example

Below is a simple example of an ACH matrix built in Excel. It is loosely based on work that Martec did for a chemical company that was interested in producing an additional chemical from the by-products of another process at the same facility. It demonstrates the basic concept; it is not a complete or detailed example.

The original hypothesis was that full-scale production would be the best course of action, as the company controlled the raw materials and inputs at a minimal cost. What ACH showed was that too much weight was given to those factors without considering much of the other evidence, suggesting that we may have been clinging too tightly to our initial impressions (also called “anchoring bias”).

ACH, at its core, is a technique designed to reduce bias. Humans naturally draw conclusions and then subconsciously favor those conclusions. To combat this, try an ACH matrix. Opening this dialogue only strengthens the analysis process and facilitates richer conversations between analysts, supervisors, and clients.

For help combatting biases in your next research project, contact us. We’re here to help decision makers move forward with the utmost confidence.